Technical Report: NVIDIA H100 GPU Floating Point Capabilities and INT4/8 Precision Impact

1. Executive Summary

- Objective: To evaluate the performance implications and trade-offs of switching from floating point to INT4/8 bit precision modes on NVIDIA H100 GPUs for AI workloads.

- Key Findings: Switching to INT4/8 precision can deliver 2-4x improvements in computational throughput and significant memory efficiency gains with manageable accuracy trade-offs when implemented correctly.

- Impact: Switching to INT4/8 precision can enable larger model deployment, lower inference latency, higher throughput(good data), while maintaining acceptable accuracy for many AI applications.

2. Introduction

-

Background: A node of H100 with 8GPU, each have 80GB VRAM, will give us 640GB. This is not enough to run a full R1, which needs 400GB+ aside for KV cache in FP8 and 671GB to load weights in FP8. We can sconclude that a full deepseek-R1 runs in production on 16 H100 GPUs (2 nodes). To run this on a single H100, alternatives strategies must be considered. As AI models grow increasingly larger, GPU memory and computational demands present significant challenges for efficient deployment and operation. Traditional floating-point precision may be unnecessarily resource-intensive for many inferences workloads

-

Purpose: To assess the benefits of adopting lower precision INT4/8 computation on H100 GPUs compared to traditional floating point formats.

3. Research Methodology

-

Approach: Technical specification analysis, performance benchmarking, and review of published research on quantization techniques for the H100 architecture.

-

Tools/Resources: NVIDIA H100 technical documentation, PyTorch/TensorFlow quantization frameworks, benchmark datasets for accuracy validation, and TensorRT optimization suite.

4. Technology Overview

- Description: The NVIDIA H100 (Hopper architecture) GPU features fourth-generation Tensor Cores and a new Transformer Engine that support multiple precision formats including FP64, FP32, TF32, FP16, FP8, INT8, and INT4.

- Features:

- Transformer Engine for adaptive precision management

- Hardware-accelerated quantization/dequantization operations

- Market Adoption: The H100 represents NVIDIA's flagship data center GPU with growing adoption across major cloud providers and AI research institutions for large-scale AI training and deployment.

5. Analysis and Findings

-

Comparison with Alternatives:

Precision Format Throughput Memory Usage Accuracy Impact Use Case Suitability FP32/TF32 Baseline High None Training, Scientific FP16 Medium Medium Minimal Training, Inference FP8 High Low Low-Medium Training, Inference INT8 Very High Very Low Medium Primarily Inference INT4 Ultra High Ultra Low High Inference Only -

Strengths and Weaknesses:

- Strengths:

- Significantly increased computational throughput (2-4x), INT4 Precision can bring an additional 59% SpeedUp Compared to INT8

- Reduced a network's memory footprint and conserve memory bandwidth enabling larger models

- Lower latency for inference workloads

- Hardware-accelerated quantization support

- Weaknesses:

- Reduced numerical precision and range

- Potential accuracy degradation

- Implementation complexity requiring specialized expertise

- Not suitable for all model architectures or operations

- Strengths:

6. Use Case Applicability

-

Limitations:

- May not be suitable for models with high numerical sensitivity

-

Integration Feasibility:

- Well-supported through NVIDIA's software stack (TensorRT, CUDA)

- PyTorch/TensorFlow integration available through quantization libraries

- Requires model-specific calibration and validation

- May require architecture-specific modifications for optimal results

7. Cost-Benefit Analysis

-

Implementation Costs:

- Engineering time for model quantization and validation

- Potential accuracy recovery work through quantization-aware training

- Testing and qualification across various inputs

-

Return on Investment (ROI):

- 2-4x increase in inference throughput per GPU

- Proportional reduction in infrastructure costs

- Ability to deploy models 2-4x larger within the same memory constraints

-

Risk Assessment:

- Accuracy degradation may impact user experience

- Maintenance complexity with mixed precision workflows

8. Recommendations

-

Adoption Plan:

- Initial Phase: Identify candidate models for INT8/4 conversion based on throughput needs and accuracy tolerance

- Testing Phase: Implement post-training quantization and validate accuracy against benchmarks

- Optimization Phase: Apply quantization-aware training where necessary

- Deployment Phase: Gradual rollout with monitoring

-

Further Research:

- Exploration of hybrid precision approaches for model-specific optimization

- Evaluation of emerging quantization techniques

- Comparing TensorRT-LLM and Ollama

-

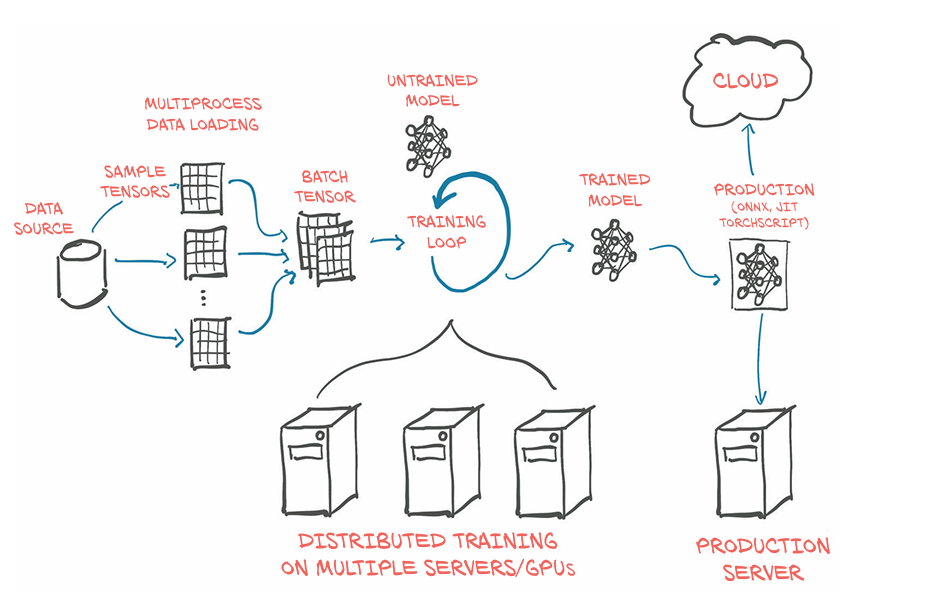

Possible Workflow:

- Download model from Hugging Face → Prefer models with GPTQ or QLoRA support (4-bit).

- Load with

transformers+AutoModelForCausalLM→ Set quantization configs. - (Optional) Quantize with GPTQ → Skip if model is already quantized; else run

GPTQtooling. - Fine-tune with QLoRA → Use

peft,bitsandbytes,transformers,accelerate(fit LLaMA-2 13B easily on H100 in 4-bit). - Evaluate and deploy locally → Wrap with

FastAPI,vLLM, or Hugging Face'stext-generation-inference

-

Untrained Model:

9. Conclusion

- Summary of Findings: INT4/8 precision modes on H100 GPUs offer substantial performance and efficiency benefits with manageable accuracy trade-offs for suitable workloads.

- Final Recommendation: Adopt INT8 precision broadly for inference workloads with selective INT4 usage for less sensitive components. Maintain higher precision for training and numerically sensitive operations.

10. Appendices

- References:

- NVIDIA H100 Technical Documentation

- "Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference"

- "ZeroQuant: Efficient and Affordable Post-Training Quantization for Large-Scale Transformers" (Yao et al.)

- "GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers" (Frantar et al.)